By using a neural network in conjunction with their own extension, the team at Skywaylab Studio was able to reduce their creatives’ production time from 20 to 2 days. Right now, the studio is focused on preparations for releasing its own tool for creating 2D animations to fit its new pipeline. Aleksey Emelyanov, one of Skywaylab’s founders, told us how that’s going to work.

Aleksey Emelyanov

How did it all start?

Everybody involved in animation knows that making creative content is a big and complex task that’s made up of tons of iterations. It can take two to three weeks to create a single animated video.

It takes at least six days to create a character alone. The artist spends up to three of those days developing the art. Correctly slicing the character, creating bones and controllers, and animating it then takes just as long, sometimes longer.

Bearing in mind the procedural nature of our line of work (teams are constantly preparing new creatives for tests and experimenting with plots and how best to present them), we’ve been looking for a way to simplify this routine pipeline for as long as the studio has been around.

Last summer, when the hype around generative neural networks was just getting started, we immediately asked ourselves whether it would be possible to teach them our craft and create an AI-based tool that could take over the entire technical process (from drawing characters to animating them), and automate UI and post-processing at the same time.

So we conducted a few experiments and soon realized that yes, it is possible!

This led us to launch two new divisions within Skywaylab: the neural network research department and the AI-based tool development department. We set them four tasks:

- To teach the neural network how to create art specifically in a given style.

- To teach the neural network to draw characters in separate parts (such as the head, neck, arms, and legs, as well as, if applicable, wings, tail, etc.).

- To teach the neural network how to rig a character autonomously.

- And finally, to teach the neural network how to animate a character in a given scenario.

Within a few months, we managed to create a product that can handle all of this. The very first test version cut our creatives’ production time by 20%. The current version has made us ten times faster: we used to spend 20 days producing one clip, and now it takes two days at most.
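The drop from 20 days to 2 can be stated as a speedup factor, as a share of time saved, or as a gain in output, and the three numbers are easy to mix up. Here is a minimal sketch of the arithmetic; the function name and dictionary keys are illustrative and have nothing to do with Animart itself.

```python
def speedup_summary(days_before: float, days_after: float) -> dict:
    """Express a change in production time in three equivalent ways."""
    if days_before <= 0 or days_after <= 0:
        raise ValueError("durations must be positive")
    factor = days_before / days_after
    return {
        # How many times faster one clip is produced.
        "speedup_factor": factor,
        # Share of the original production time that is no longer needed.
        "time_saved_pct": (days_before - days_after) / days_before * 100,
        # Extra clips produced in the same amount of time, relative to before.
        "throughput_gain_pct": (factor - 1) * 100,
    }


# Figures quoted in the interview: 20 days per clip before, 2 days after.
summary = speedup_summary(20, 2)
for name, value in summary.items():
    print(f"{name}: {value:.0f}")
```

With these inputs, the same change reads as ten times faster, 90% less time per clip, or 900% more clips in the same period.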

How does it work?

Our product is called Animart. It’s an artificial intelligence-based extension for Adobe After Effects (AE). We focused on integrating it organically into the AE workspace in order to preserve the familiarity of working with Adobe products.

Here’s how the work process goes for users:

- the designer installs the plug-in in AE;

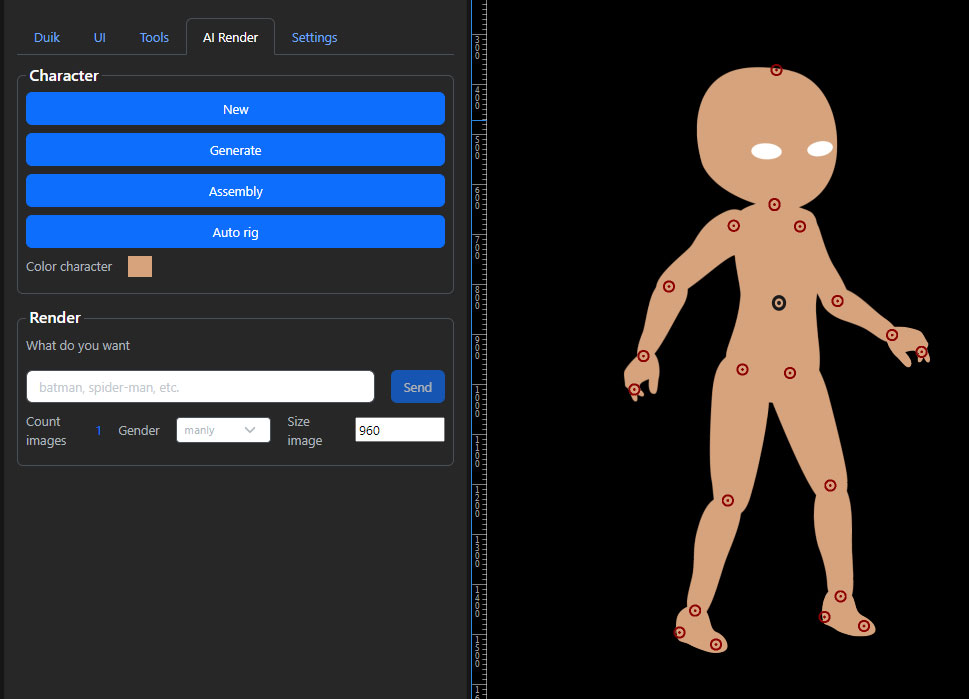

- the designer determines the future character’s proportions using the constructor;

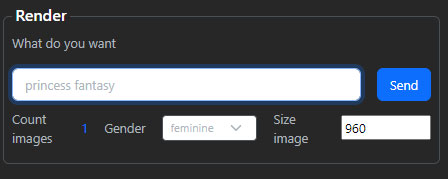

- to further help with visualization, the user describes the character with a prompt, for example: “a big, green, hairy bear wearing a yellow winter coat”;

- the request is sent to the Skywaylab server, where the neural network that has been taught our art style is located;

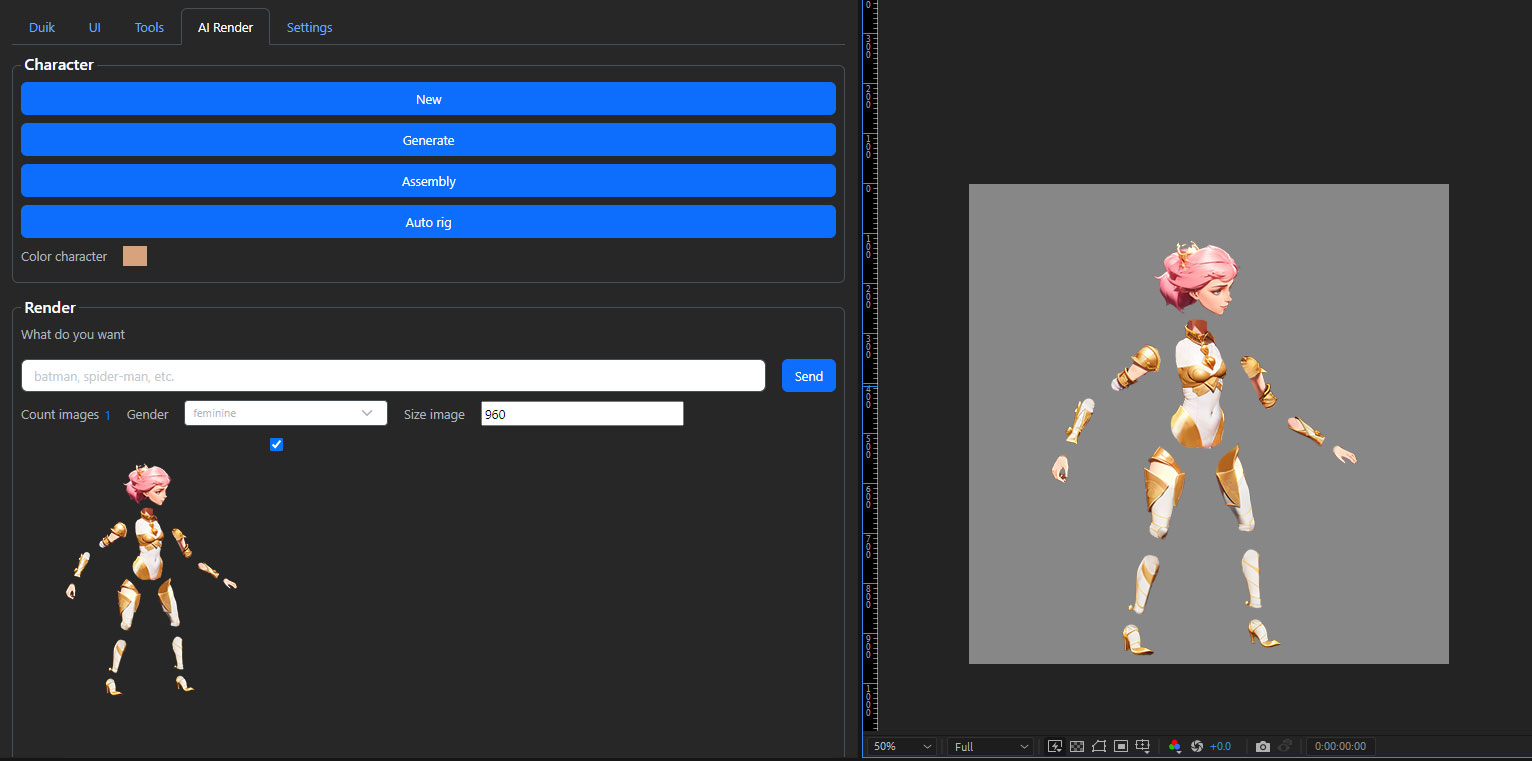

- a few seconds later, the user receives a generated and immediately disassembled character (you can get multiple options at once and pick out the most fitting result);

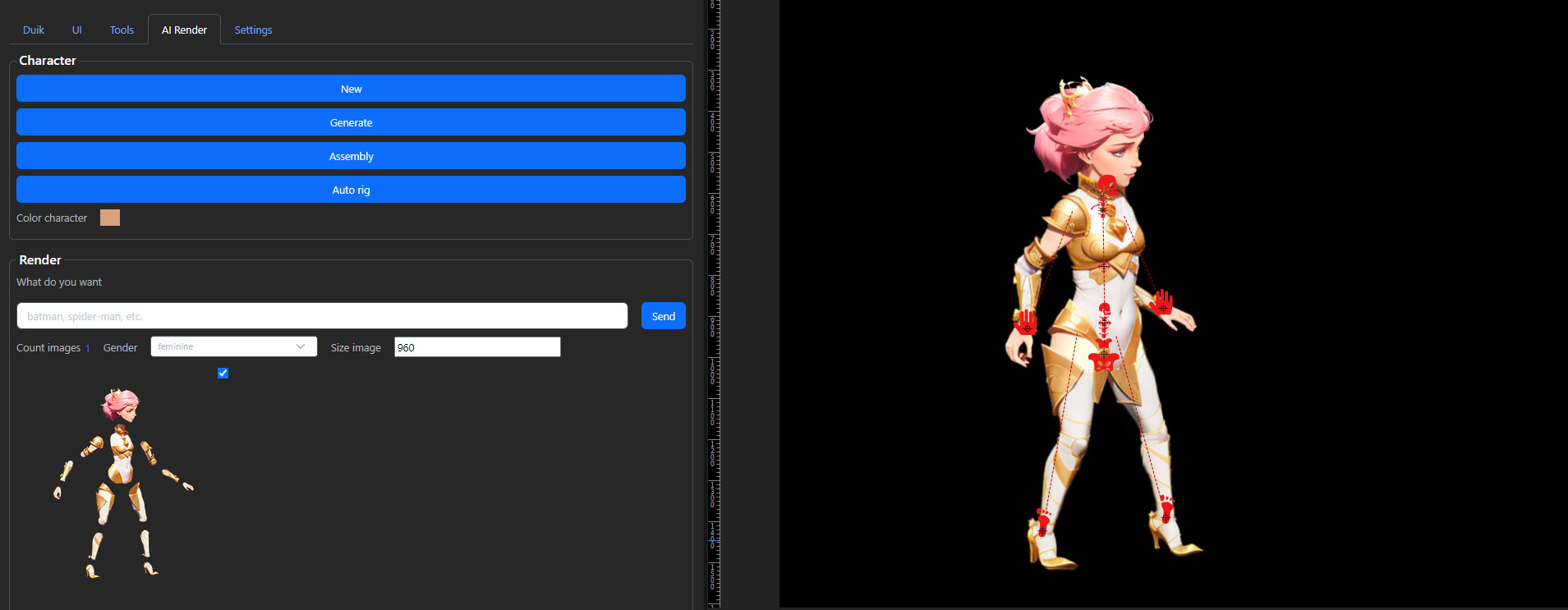

- the user presses the “Auto rig” button and gets a model that’s ready for animation;

- then, all that’s left is to pick out an animation from the Animart library (you can also add your own animations to this library);

- all the animations are applied to the character instantly, regardless of resolution, structure or shape;

- the final stage is sending the animations to render.
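The steps above always run in the same order, which can be sketched as a small ordered pipeline. This is only an illustration of the sequence described in the list: every name here (`Stage`, `CharacterJob`, `advance`) is hypothetical, and the plug-in's actual interface is not described in this article.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Stage(Enum):
    """Hypothetical names for the user-facing steps listed above."""
    SET_PROPORTIONS = auto()   # proportions chosen in the constructor
    DESCRIBE_PROMPT = auto()   # text description of the character
    GENERATE = auto()          # server returns a character split into parts
    AUTO_RIG = auto()          # "Auto rig" button
    APPLY_ANIMATION = auto()   # animation picked from the library
    RENDER = auto()            # final render


# Enum members iterate in definition order, which is the workflow order.
ORDER = list(Stage)


@dataclass
class CharacterJob:
    """Tracks which steps a single character has been through."""
    prompt: str
    completed: list = field(default_factory=list)

    def advance(self, stage: Stage) -> None:
        """Mark a stage as done, refusing steps that are out of order."""
        if len(self.completed) == len(ORDER):
            raise ValueError("job is already rendered")
        expected = ORDER[len(self.completed)]
        if stage is not expected:
            raise ValueError(f"expected {expected.name}, got {stage.name}")
        self.completed.append(stage)


job = CharacterJob(prompt="a big, green, hairy bear wearing a yellow winter coat")
for stage in ORDER:
    job.advance(stage)
print([s.name for s in job.completed])
```

The point of the ordering check is that each step depends on the one before it: a character cannot be rigged before it has been generated, or rendered before an animation has been applied.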

But this is only the beginning.

What’s next?

Here’s what we’re planning on adding to Animart in the foreseeable future:

- a 3D mannequin that will allow you to specify to the neural network from which angle to generate a 2D image of the character;

- a pseudo-3D camera that will allow you to dynamically change the visual angle of the character (with set keys for changing the angle, as if the camera is moving relative to the character along a given trajectory and at a given pace);

- a function that simulates multi-camera shooting with multiple characters interacting in a single scene;

- a function for animating backgrounds and surroundings;

- an animation store, where designers will be able to post and sell their own characters and animation presets, which will be categorized by keywords, ratings and popularity.

What about the team?

Previously, to put out 500 creative ads per month, we had to grow our production team to 200 people.

But that doesn’t mean that we’ve reduced our number of designers after introducing Animart to our internal processes.

The volume of orders we’re receiving is snowballing, and our short-term goal is to produce 6,000 creative projects per month.

With Animart, this no longer feels like a fantasy.