In his column for App2Top, Andrey Novikov, an artist at ZiMAD, explains how to find a common language with AI and which parts of the work can really be delegated to neural networks without loss of quality.

Andrey Novikov

When I first started working as a designer, there was much less software on the market, and its functionality was limited.

Today everything is different. Some solutions, for example, let you minimize monotonous work.

There is a downside: the software itself is becoming harder to master, so you have to keep learning all the time.

In my work I now mainly use a combination of Photoshop with its built-in AI and Stable Diffusion.

I started studying the latter with manuals on the web. A year ago, it was impossible even to install and configure the neural network without working through them (there were no ready-made packages). In the end, I even took a training course on working with Stable Diffusion.

Do I advise you to do the same?

Definitely. If you have the opportunity to learn from people who have already figured out the topic and are ready to share their experience, use it. Courses can shorten the path from two weeks to two days: they give you all the ins and outs and the fundamentals you need to start working with the software right away.

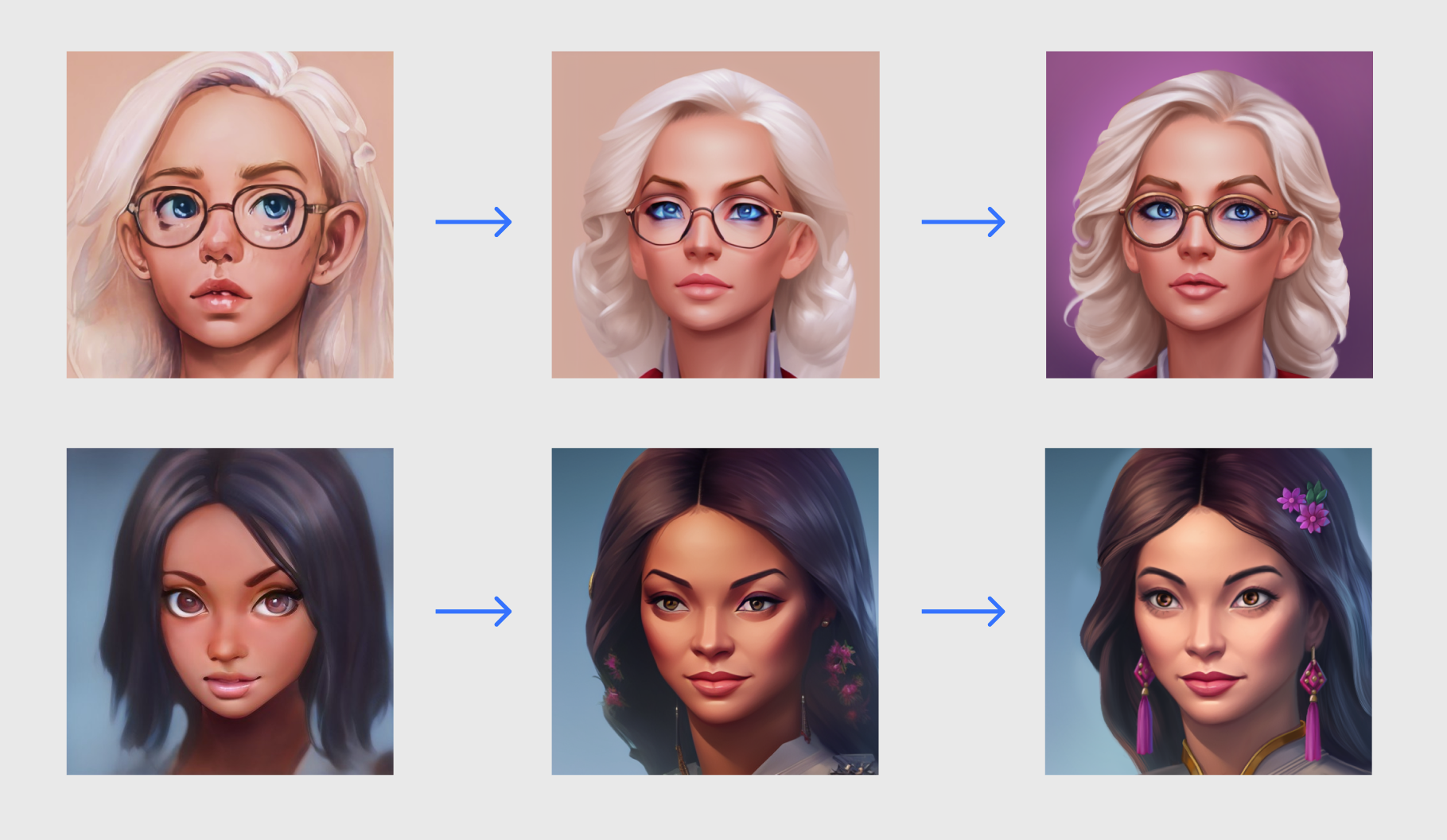

Stable Diffusion is set up locally on your computer: you install Python, run the launch script, and get a link that opens the web interface when you click it.

What sets Stable Diffusion apart is its variety of settings. To work with it, you need to know which settings exist and how they work. These are not the usual Photoshop buttons, whose purpose you can guess from the icon.

You can also work through other interfaces; the choice is up to the individual user. Other interface options include Easy Diffusion, Vlad Diffusion, and NMKD Stable Diffusion GUI.

The neural network returns a result almost instantly. This is not a render, during which you have time to go to the kitchen for coffee and exchange a few words with colleagues at the cooler. But to work quickly with AI, it is important to customize everything for yourself.

AI Training

Working with a neural network as a streamlined, repeatable process is the result of training.

For example, I needed the neural network to be able to generate an interior in a style that suits me. To do this, I did the following:

- I took images from one of ZiMAD's projects (Puzzle Villa);

- I cut all the images into small parts so that each fragment contained one element of the interior;

- I asked the neural network to describe what it sees in the images (at this stage it is important to check the accuracy of the descriptions and correct them as necessary; if this is not done, the neural network will persistently produce erroneous results later on; for example, if it mistakes a chest of drawers for a person, then a request for a chest of drawers will produce a person);

- after training the neural network, I got the style I needed.

By the way, the trained style can be handed over to colleagues to keep the final images as uniform as possible. All they need is a key: the embedding.
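The image-cutting step in the list above can be sketched as follows. This is a minimal illustration, not the exact procedure used at ZiMAD: it assumes a plain regular grid, whereas fragments chosen by hand around each interior element would need manual cropping. The function name `tile_boxes` and the tile size are hypothetical. The boxes use the `(left, upper, right, lower)` convention, so they can be passed to an image library's crop function (Pillow's `Image.crop` accepts this form).

```python
from typing import List, Tuple

# A crop box in (left, upper, right, lower) pixel coordinates.
Box = Tuple[int, int, int, int]


def tile_boxes(width: int, height: int, tile: int) -> List[Box]:
    """Return crop boxes that cover a width x height image with square tiles.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    if width <= 0 or height <= 0 or tile <= 0:
        raise ValueError("width, height and tile must be positive")
    boxes: List[Box] = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            right = min(left + tile, width)
            lower = min(top + tile, height)
            boxes.append((left, top, right, lower))
    return boxes


if __name__ == "__main__":
    # Hypothetical 1024x768 source image cut into 512-pixel fragments.
    for box in tile_boxes(1024, 768, 512):
        print(box)
```

Each resulting fragment then needs its own checked and corrected description before training, as the third step in the list stresses.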

As a result, working with AI was reduced to three stages for me:

- I show the neural network 50+ pictures, describe them, and get a new style;

- I pass the images that need to be finalized through the style;

- the illustrator refines the resulting image (adds or removes details).

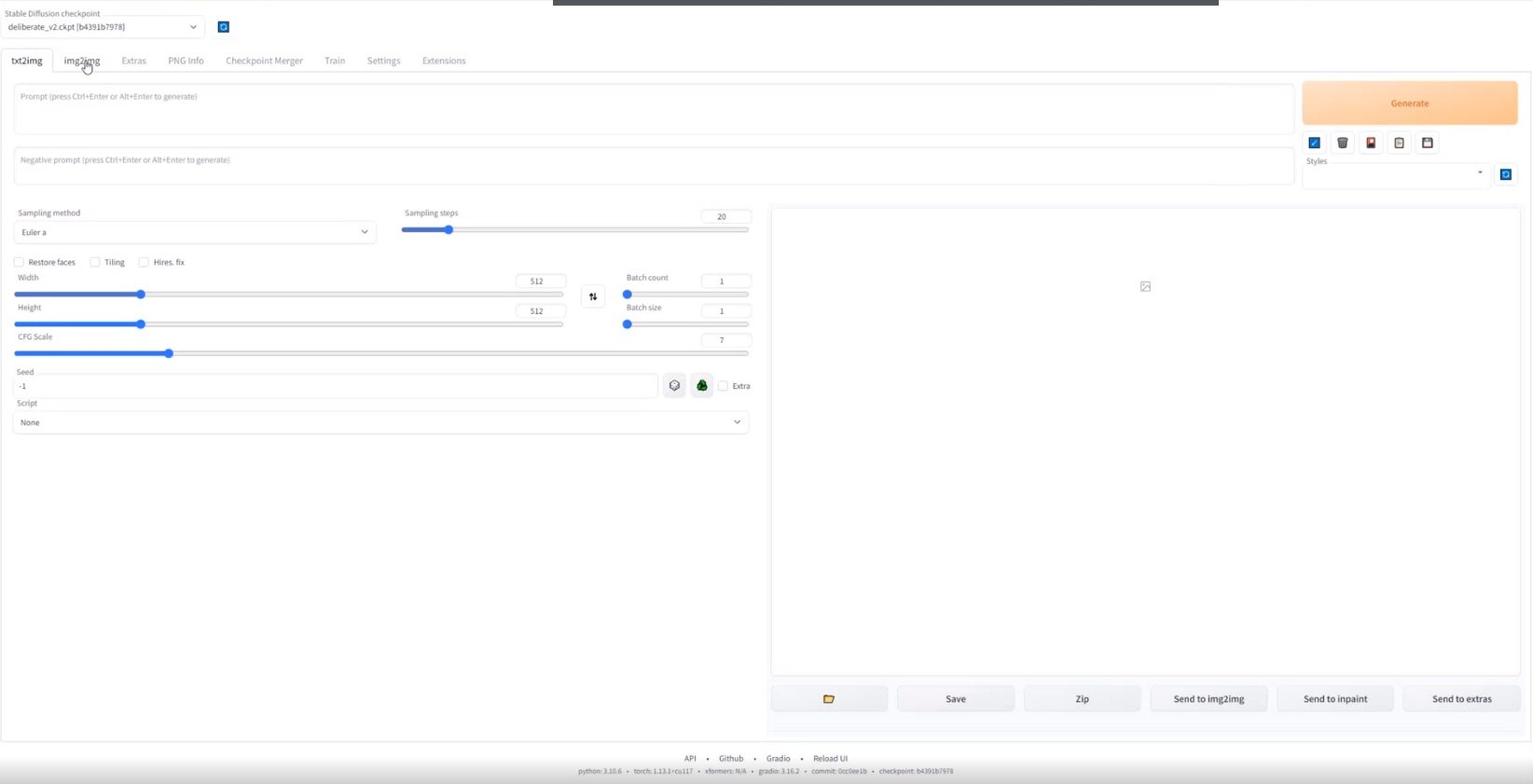

1 — the original image; 2 — the image passed through the style; 3 — the image modified by the illustrator.

Prompts are the key to interacting with AI

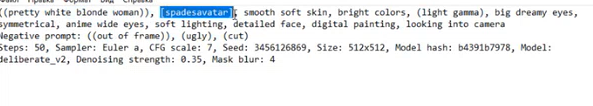

If you want to get high-quality results from the neural network, you need to get the hang of working with prompts, that is, text queries. The final result depends on how they are formulated.

Each prompt is unique, and any small detail can affect the image, even the word order.

However, I do not recommend relying exclusively on prompts. You can also exchange images with a neural network. For me personally, that is easier than explaining everything in text. For example, say you need to get an image based on a specific cat.

I insert the cat and write that I want a cat in a hat. But the probability that the AI gives the desired result on the first request is close to zero. To speed things up, I sketch a hat in Photoshop.

Next, in the AI interface, I select the area the neural network needs to work on (the inpaint tool) and set the necessary parameters: the number of variations, the level of detail and, most importantly, Denoising strength, which controls how closely the neural network follows my sketch (at 0 it makes no changes at all, and at 1.0 it completely ignores my sketch and draws whatever it wants).

I then get several variations of the cat in the hat and choose the one that suits me.
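One way to build intuition for Denoising strength is to treat it as the share of sampling steps that are actually re-run on top of the sketch. Some image-to-image implementations (the Hugging Face diffusers img2img pipeline, for example) scale the step count this way; the interface you use may compute it differently, so take the sketch below as an assumption. The function name `effective_steps` is hypothetical.

```python
def effective_steps(sampling_steps: int, denoising_strength: float) -> int:
    """Estimate how many denoising steps are applied to the input image.

    Assumption: the step count scales linearly with the strength,
    truncated to a whole number and capped at the requested steps.
    """
    if sampling_steps < 0:
        raise ValueError("sampling_steps must not be negative")
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return min(int(sampling_steps * denoising_strength), sampling_steps)


if __name__ == "__main__":
    for strength in (0.0, 0.25, 0.5, 1.0):
        print(strength, effective_steps(20, strength))
```

Under this assumption, a strength of 0 leaves the sketch untouched because no steps run, while 1.0 runs every step and the sketch has almost no influence, which matches the two extremes described above.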

Another example of how a neural network speeds up the artist's work is presented below.

A 2D artist needed to make a unique UI for an event. He made a sketch, and I passed it through the neural network, additionally writing all of the artist's wishes into the prompt. As a result, we almost immediately got an excellent base for refinement.

Important: boxes with apples, ladders and sheep in the illustration were generated separately. This was done for a more predictable result and further flexible refinement.

Another very powerful direction is 3D. I currently have tasks to generate small rooms. The neural network copes well with interiors, but the perspective keeps shifting during generation, and I need a specific angle. I solve the problem using ControlNet in just a couple of steps.

Stage one. I model the scene in 3D, place the objects in the scene, set up the camera, render the depth map and get this image.

Stage two. I upload the depth map to ControlNet and adjust the settings so that the neural network generates images based on this depth map, preserving the angle and the location of objects. Next, I specify in the prompt that the AI should produce a dark, cluttered attic with a window in the roof and a cardboard box in the center of the frame. I get the following image.
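The depth map from stage one is just a grayscale image that encodes distance from the camera. A minimal sketch of that encoding is shown below. It assumes the convention that nearer surfaces are brighter, which depth ControlNet models typically expect; check the convention of the model you use, since a 3D package may export the inverse. The function name `depth_to_gray` is hypothetical, and a real render would produce a full image rather than a small nested list.

```python
from typing import List


def depth_to_gray(depth: List[List[float]]) -> List[List[int]]:
    """Map camera distances to 8-bit gray values.

    Assumed convention: the nearest point becomes 255 (white) and the
    farthest point becomes 0 (black).
    """
    flat = [d for row in depth for d in row]
    if not flat:
        raise ValueError("depth map must not be empty")
    near, far = min(flat), max(flat)
    if far == near:
        # A flat scene has no depth variation; render it uniformly white.
        return [[255 for _ in row] for row in depth]
    span = far - near
    return [[round(255 * (far - d) / span) for d in row] for row in depth]


if __name__ == "__main__":
    # Hypothetical distances in scene units for a tiny 2x3 render.
    scene = [[1.0, 2.0, 5.0], [1.5, 3.0, 5.0]]
    for row in depth_to_gray(scene):
        print(row)
```

Because the map carries only geometry, the prompt remains free to decide what the surfaces look like, while the angle and object placement stay fixed.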

After that, I refine the atmosphere in graphic editors, following the brief. It was supposed to be an image of a sad cat asking for help in an attic on a rainy night during a severe thunderstorm. I get an image that I can pass to the animator for further work.

The value of AI for artists

A neural network is a tool that helps create a base, which you can then finish as you see fit. Simply put, thanks to the neural network, artists can devote more time to refining the image rather than to technical work.

A year or two ago, if I had been given the task of drawing 20 avatars, the process would have looked like this:

- search for references;

- preparation of sketches;

- drawing each avatar from scratch;

- finalizing each avatar.

Today, the neural network has taken over most of the work, significantly reducing the time spent on a routine task.

If the project style is ready, then generating, for example, an avatar for the game may take me only 5-7 minutes. During this time I will get 20-30 images to choose from. It takes about an hour to generate a dozen avatars with the same number of variations.

Using the power of AI, I can single-handedly complete many more tasks than before. That makes it a great tool. But it is a tool, not a magic button that supposedly threatens to take work away from artists.

Neural networks complement each other perfectly. For example, Stable Diffusion works better with casual graphics, while Photoshop's neural network is good with realistic images. However, its result is not as predictable as in Stable Diffusion, because there are no additional settings. A user-friendly interface compensates for this.

For example, if an interesting picture is inconveniently cropped, you can fill in the missing part directly in Photoshop. This takes no time at all. In Stable Diffusion, you can get the same result, but with a little more effort.

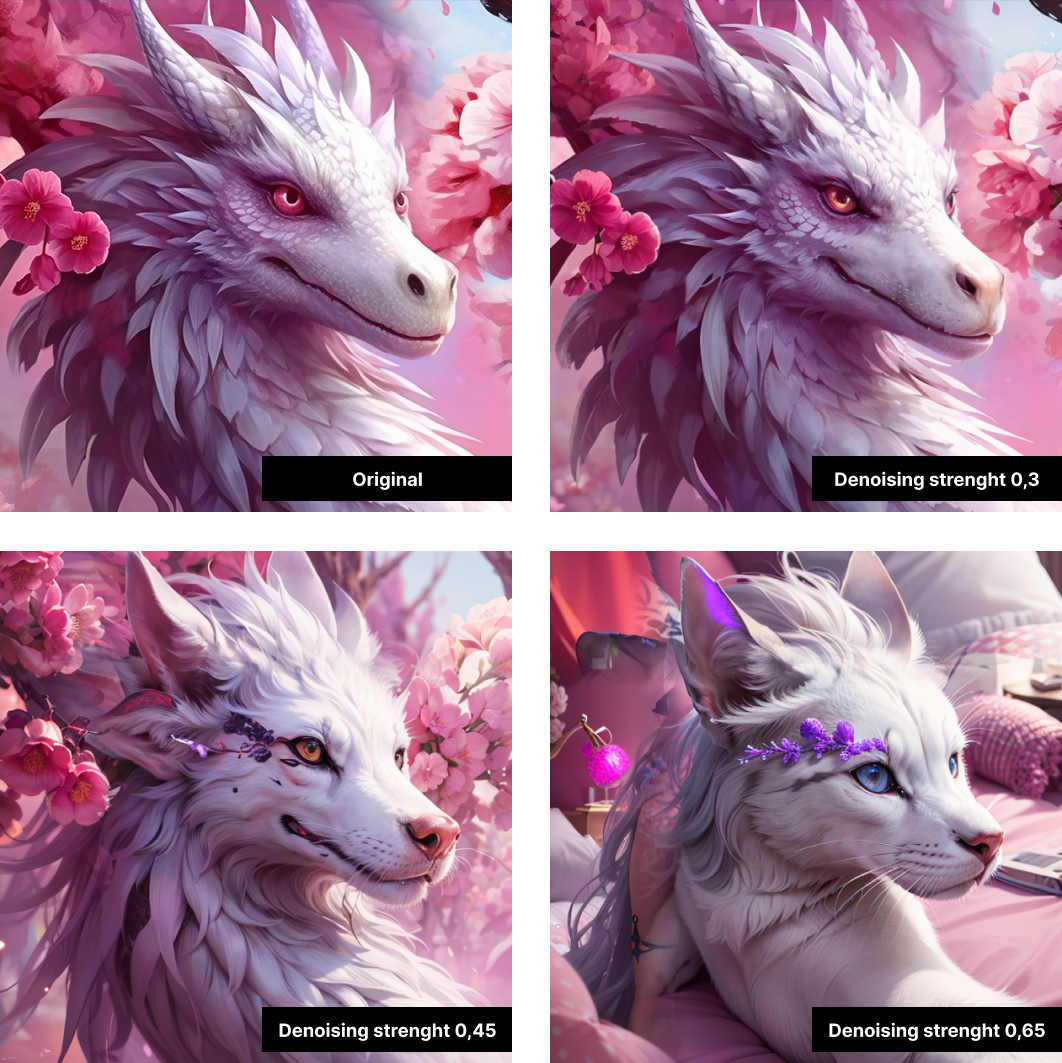

Midjourney can make beautiful fantasy pictures, but, unfortunately, their resolution leaves much to be desired. So at ZiMAD we use Midjourney together with Stable Diffusion to increase resolution. The great thing is that you can keep the image the same, improving only the resolution or adding the necessary details if required.

For example, a colleague made a beautiful picture for Magic Jigsaw Puzzles with Midjourney, and I increased its resolution to 4K via Stable Diffusion.

Here the Script field helps. I choose one of the offered upscalers (in our case, LDSR), set the Scale Factor parameter, which determines how many times the picture will be enlarged, and make sure to set the Denoising strength parameter to near-minimum values. If this parameter is set too high, the neural network seriously redraws the picture; for upscaling, we use values of 0.1-0.2.

If you choose a good denoising value, the neural network will only finish the details and will not change the picture much. For example, this is how the wolf got a detailed coat and the pixelation disappeared.
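Choosing the Scale Factor comes down to simple arithmetic. The sketch below assumes that 4K means 3840x2160 pixels and that the factor should be a whole number; both are assumptions, since the interface may accept fractional values, and the source sizes in the example are hypothetical. The function name `scale_factor` is also hypothetical.

```python
import math


def scale_factor(src_w: int, src_h: int,
                 target_w: int = 3840, target_h: int = 2160) -> int:
    """Smallest whole-number factor at which the enlarged image
    is at least target_w x target_h pixels."""
    if src_w <= 0 or src_h <= 0:
        raise ValueError("source dimensions must be positive")
    needed = max(target_w / src_w, target_h / src_h)
    return max(1, math.ceil(needed))


if __name__ == "__main__":
    # Hypothetical 1024x1024 source image.
    factor = scale_factor(1024, 1024)
    print(factor, 1024 * factor, 1024 * factor)
```

With a whole-number factor the result usually overshoots the target slightly, so the picture can be cropped or downscaled to the exact size afterwards.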

Working with Stable Diffusion has drawbacks, of course. Architecture and text are still hard for it: buildings can be chaotic, and lettering can be completely unreadable.

But I am sure that these shortcomings will be resolved over time.

Despite the difficulties that arise when working with neural networks, artists and game teams need to learn how to work with them today. Otherwise, they are unlikely to be able to remain competitive in the market.

For example, I currently have a task to prepare 50 icons. Creating them manually could take me at least a week. With the help of a neural network, it is unlikely to take more than two days.

So, it is better not to resist new technologies, but to learn to interact with them effectively.