Using Midjourney allowed the game studio Lost Lore to cut its art production costs significantly. In this column, Evgeny Kitkin, the studio's founder and CEO, explains how.

Evgeny Kitkin

Right before our eyes, a revolution is taking place in the gaming industry.

Neural networks seem to have suddenly learned to create high-quality art (although in fact it took years). Any game studio can now significantly cut costs and speed up development, provided, of course, that it is willing to adapt to the new reality and become a pioneer in using AI to create games.

We have taken this step. At the beginning of autumn, Vlad Martynyuk, the studio's art director, was given an R&D task: figure out how artificial intelligence could be used in mobile game development. Our flagship project, Bearverse, became the testing ground for this research.

To get ahead of myself: the decision was the right one. Today Lost Lore is one of the first teams to use neural networks in mobile game development, and thanks to this we have significantly reduced the time and money spent on creating graphics.

How does it all work?

Just in case, I'll start with the basics: a little about how work with Midjourney, the neural network we used on Bearverse, is usually organized.

The AI works from prompts that the user writes, and writing prompts has quickly become an art in its own right, one that takes time to master.

Keywords describe not only the content of the future image (what it should depict) but also the manner in which it should be rendered. This can be a general description of the style or a reference to a particular artist, era or art movement (Baroque, neoclassicism, and so on).

Let's look, for example, at what Midjourney offers if we enter the prompt “character design biomechanical smile”.

The user then picks the option they like and can ask the neural network for variations of it.

Among other things, we used the neural network to create bears.

Bearverse features a variety of bear characters representing different clans and classes, so they all look different from one another.

To create each new bear, we uploaded bears we had already drawn as references and added a detailed prompt spelling out which new game character we wanted.

We also specified the colors of the future image, the objects the bear holds in its paws, its pose, the background, whether we wanted crisp reflections, and so on.

Our prompts usually looked something like this: “evil Grizzly bear in action wearing iron steel body armor skull ornament, dynamic attack pose, ancient helmet and gas mask, in post apocalyptic style, etc.”
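To make the structure of such a prompt concrete, here is a minimal Python sketch that assembles one from its parts: reference images first, then the subject, then the descriptive keywords and the style. The helper `build_prompt` and the example.com URLs are hypothetical placeholders, and the sketch assumes the references are supplied as image URLs at the start of the prompt text, which is how Midjourney accepts image prompts. It only builds the string; it does not call Midjourney.

```python
def build_prompt(reference_urls, subject, details, style):
    """Assemble a Midjourney-style prompt string (hypothetical helper).

    Reference image URLs come first (assumed to be treated as image
    prompts), followed by the subject, the descriptive keywords and the
    style, joined with commas. Blank keywords are skipped.
    """
    keywords = [subject, *details, style]
    text = ", ".join(k.strip() for k in keywords if k.strip())
    return " ".join([*reference_urls, text])


# Placeholder URLs standing in for previously drawn bears used as references.
refs = [
    "https://example.com/bears/reference_1.png",
    "https://example.com/bears/reference_2.png",
]
prompt = build_prompt(
    refs,
    subject="evil Grizzly bear in action",
    details=[
        "wearing iron steel body armor",
        "skull ornament",
        "dynamic attack pose",
        "ancient helmet and gas mask",
    ],
    style="in post apocalyptic style",
)
print(prompt)
```

Keeping the parts separate makes it easy to give each new bear its own subject and details while reusing the same references and style keywords.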

Naturally, each new bear got its own prompt. Here are the results:

Can these images be called perfect?

Of course not. Art created with AI needs refinement. If your team has an experienced graphic designer, this is not a problem.

Vlad removed graphic artifacts (for example, incorrectly rendered weapons, eyes and paws, which neural networks perpetually struggle with), added important details, corrected shadows where necessary and drew snow.

The final design of each bear was then refined and approved by an experienced specialist.

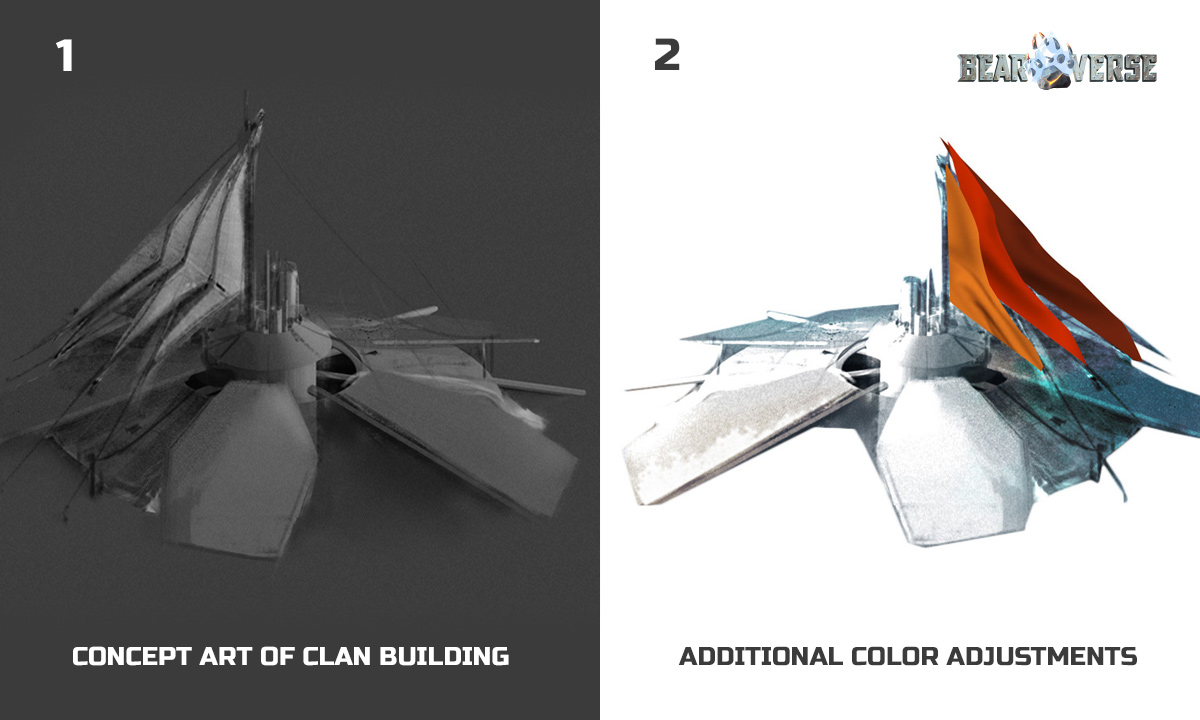

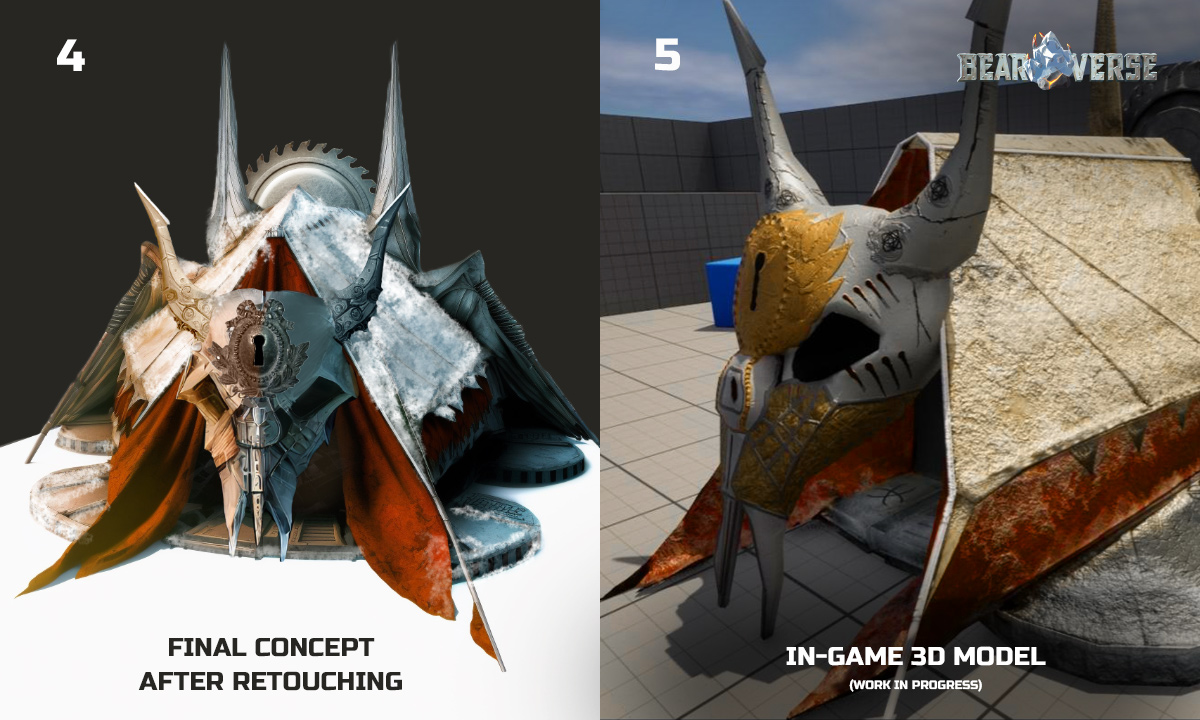

We also used the neural network to prepare building concepts.

For example, we uploaded a 3D render of a tent we had made as a reference (several images of it from different angles in isometric projection) and, as with the bears, added a prompt with a detailed description.

Among other things, the neural network had to work from the words “Biomechanics style post-apocalypse”. This time, however, not everything went smoothly, so Vlad spent longer finalizing the concept, which we then used as the basis for the three-dimensional in-game model.

What else?

Another point that matters a great deal for any mobile game studio: you can feed the AI prompts to generate concepts for game locations.

Keep in mind that this is quite a costly part of development. Creating 2D concepts requires several experienced professionals on the team, and each of them can spend one to two weeks on the concept for a single location. With a neural network, an experienced designer can handle this task in a day.

Of course, AI cannot produce a map or a location in its final form; you cannot simply take the result and drop it into the game. However, you do get designs for a large number of objects and content, and that is very important, especially in games where the player does not interact with locations directly (as was the case for us).

Business Application

As mentioned above, AI increases the productivity of illustrators and designers. Used properly, the technology can speed up development dozens of times.

For example, on Bearverse our art director took less than a week to create and refine 17 characters, as well as a number of UI elements.

And how long did it take before?

Work on one character took about 16 hours, that is, two working days.

In other words, creating 17 playable characters would have taken us 34 working days, and we would most likely have had to bring in several additional specialists as well.
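The estimate above is simple arithmetic, sketched here in Python. The 8-hour working day is implied by the article's "16 hours, that is, two working days"; reading "less than a week" as at most five working days is our own assumption, used only to put a lower bound on the speedup for this batch of characters.

```python
HOURS_PER_CHARACTER = 16  # from the article: about 16 hours per character
HOURS_PER_WORKDAY = 8     # implied by "16 hours, that is, two working days"
CHARACTERS = 17


def workdays_needed(characters, hours_per_character=HOURS_PER_CHARACTER,
                    hours_per_day=HOURS_PER_WORKDAY):
    """Working days needed to draw `characters` characters the classic way."""
    return characters * hours_per_character / hours_per_day


classic_days = workdays_needed(CHARACTERS)  # 17 * 16 / 8

# Assumption: "less than a week" with AI means at most 5 working days,
# so dividing by 5 gives a lower bound on the speedup.
AI_DAYS_UPPER_BOUND = 5
min_speedup = classic_days / AI_DAYS_UPPER_BOUND

print(f"classic: {classic_days:.0f} working days")
print(f"speedup: at least {min_speedup:.1f}x")
```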

Overall, we calculated that AI lets us cut costs by a factor of 10 to 15 with comparable results.

Given all this, we now plan to use neural networks not only for our own games but also to create graphics for the projects we work on as an outsourcing contractor.

Conclusions

As the CEO of a game development studio, I have to think not only about the quality of our games, but also about the cost-effectiveness of all processes.

Neural networks are a revolutionary tool that will undoubtedly change the gaming industry.

In our case, with the classic approach to graphics, creating concepts for 100 characters would have cost the studio $50,000 and six months of work.

Using AI brought the development cost down to $10,000 (80% cheaper) and the development time to one month (six times faster). These are impressive results.
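The percentages and ratios in the two paragraphs above can be checked with a few lines of Python. The helper `reduction` is hypothetical; the inputs are the article's own figures for 100 character concepts. Note that an 80% cost reduction corresponds to a five-fold drop, and the cost per character concept falls from $500 to $100.

```python
def reduction(before, after):
    """Return (percent saved, before/after ratio) for a cost or a duration."""
    percent_saved = (before - after) * 100 / before
    return percent_saved, before / after


# Figures from the article for concepts of 100 characters.
CHARACTERS = 100
cost_saved_pct, cost_ratio = reduction(50_000, 10_000)  # USD
time_saved_pct, time_ratio = reduction(6, 1)            # months

print(f"cost: {cost_saved_pct:.0f}% cheaper, {cost_ratio:.0f}x less")
print(f"time: {time_ratio:.0f}x faster ({time_saved_pct:.0f}% less time)")
print(f"per character: ${50_000 / CHARACTERS:.0f} -> ${10_000 / CHARACTERS:.0f}")
```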

In general, with a neural network, game development becomes:

- cheaper (profitability skyrockets);

- faster (development time is significantly reduced);

- easier (the AI readily suggests possible options when working on art);

- less risky (prototypes are created and tested faster, so hypotheses are validated sooner, which improves the chances of success);

- more profitable (by releasing content more often, you can earn more);

- more reliable (a neural network never gets sick or tired, and the human factor is virtually eliminated).

It is also important to understand that a small team of professionals is enough to make games with neural networks, and it is much easier to build strong, clear working relationships within such a team.

I believe that game studio CEOs today should push their graphics specialists to learn to work with AI. Those who are first to master creating graphics with neural networks will lead the industry.

If you are interested in this topic specifically, or in the mobile game development industry in general, I will be glad to talk.