Eric Seufert, Marketing Director of Wooga, explains on his blog why you should not fixate on data collection and analysis.

In the summer of 2013, Zynga laid off 520 people. One of the company's former employees started a thread on Reddit the day after being laid off. Two posts in the discussion caught my attention.

Post 1

I think the idea of collecting all the information about what the user does in the game is wonderful. But over-reliance on this data is not so wonderful. It turns development into analytical work and robs it of clarity. When a game brings pleasure and joy, you can easily say that the game is cool. You cannot say that so unequivocally from analytics alone.

Post 2

It seems that the company has decided to abandon innovation in favor of a strategy of “doing what worked before.” I’m not sure that many of its games were A/B tested over the past year in search of cool new mechanics. But the company did succeed at collecting data.

These posts led me to believe that Zynga was incurring the “hidden costs” of A/B testing. In other words, the company had run into the pitfalls of such testing.

The obvious costs of A/B testing are easy to see: A/B tests take time to create, implement and evaluate, and this time could have been spent on completing the product. In fact, while such a test is running, development of the affected components of the game is suspended. Often, these obvious costs are used to justify refusing to run complex tests in the future. But I think that these costs are overestimated.

If it takes more than 30 minutes to create and implement an A/B test, it simply means that the company has not allocated sufficient resources to building its own A/B testing infrastructure. But this is not a problem, because there are companies like Swrve that provide such services.

Some companies are constantly testing and optimizing something, and for them the cost of such tests is minimal.

But the “pitfalls” of A/B testing are a completely different matter. They are born of a constant dependence on incessant, incremental changes. This excessive dependence is blinding: because of it, the company may stop noticing fundamental flaws in its products.

Incremental changes are not entirely immaterial. As a rule, they take the form of single-digit percentage improvements to some process metric, whether that is landing page conversion, total revenue, session length, and so on, and over time they can give the product an advantage in the market.

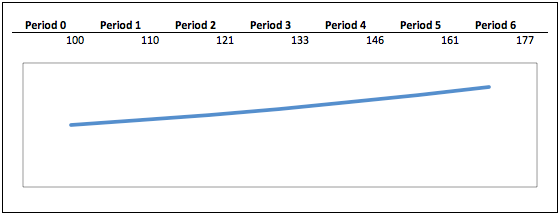

The straight line in the graph shows a 10% increase in the metrics of a particular process.
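To see how single-digit improvements can add up to a figure like that 10%, here is a minimal sketch. The individual uplift values are invented for illustration, and the code assumes the improvements are independent and multiply together, which real tests may not satisfy.

```python
# Hypothetical uplifts from three successive A/B test wins.
# These numbers are illustrative only; they do not come from the article.
uplifts = [0.05, 0.03, 0.02]


def compound(uplifts):
    """Combine uplifts multiplicatively (assumes they are independent)."""
    total = 1.0
    for u in uplifts:
        total *= 1.0 + u
    return total - 1.0


combined = compound(uplifts)
print(f"Combined uplift: {combined:.1%}")  # prints "Combined uplift: 10.3%"
```

Under these assumptions, three small wins of 5%, 3% and 2% are enough to produce a roughly 10% gain in a single process metric.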

But obsessing over such minor improvements can distract the team from the real problems of the product. And this is not merely an opportunity cost. Often the question really is a choice: “Do we run A/B tests, or do we eliminate the structural flaws in our product?” It is a mistake to treat incremental fixes as a panacea for all ills.

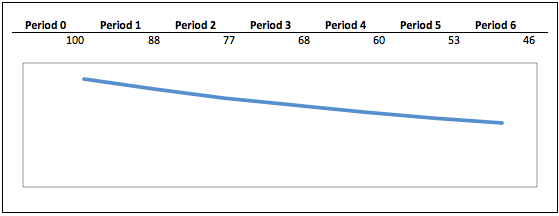

In the graph above, you can see that the 10% increase in the metrics of a particular process took place against the background of a 20% drop in the overall metrics of the product.
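A quick back-of-the-envelope calculation shows how large the hidden problem must be. The sketch below assumes, purely for illustration, that the product's overall metric is the optimized process metric multiplied by everything else (for example, revenue = conversion rate × everything else). The function name and this decomposition are my own assumptions, not something taken from the graph.

```python
def implied_change_elsewhere(micro_change, total_change):
    """Relative change in 'everything else', assuming
    total = micro_metric * everything_else (an illustrative model)."""
    return (1.0 + total_change) / (1.0 + micro_change) - 1.0


# 10% gain in the tested process, 20% drop in the product overall.
rest = implied_change_elsewhere(0.10, -0.20)
print(f"Implied change in the rest of the product: {rest:.1%}")
# prints "Implied change in the rest of the product: -27.3%"
```

Under that assumption, the rest of the product did not merely lose 20%: it lost about 27%, and the A/B testing win masked part of the decline.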

But be careful. Misallocated resources and effort are not the only possible consequence of A/B testing. The real problems begin when you believe that working on small improvements can replace developing the product itself. A/B testing a weak product is like going to the gym with a gunshot wound.

Companies that fixate on data collection and tracking user actions sacrifice growth at the macro level for minor improvements in micro-level processes. Those improvements do not mitigate the fundamental problems of the project and can even accelerate their spread.

A/B testing is a tool, not a development strategy. It should be used to bring even more pleasure to those users who are already enjoying the product, and not to convince them that the game is much better than they think.

Source: http://mobiledevmemo.com