At WWDC 2019, Apple introduced a number of new tools for developers. Here is a brief look at RealityKit, Reality Composer, SwiftUI and several others.

Expectations for the opening WWDC keynote were low, and we did not expect much either. Contrary to forecasts, Apple managed to deliver a few surprises, and some of them directly concern developers.

Important: there were no sweeping revolutions. Most of the tooling changes are aimed at simplifying development and improving the performance of system features that had already been introduced.

The developer-related announcements fall into three groups:

- augmented reality;

- development environment;

- changes related to the new OS.

Augmented Reality

For augmented reality, Apple focused on three tools.

RealityKit

This is a framework created to bring highly realistic objects and effects into an augmented reality scene, and to compute their animation and physics. Apple names photorealistic rendering of objects as the key feature of RealityKit.

Simply put, its task is to make objects superimposed on the real environment in AR look realistic and behave as correctly as possible.

Although few AR applications make money so far, Apple continues to believe in the platform.

Another feature of the framework is automatic adjustment to the capabilities of a specific iOS device.

Apple says it is enough to make one version of an AR object. If a particular device cannot handle it, RealityKit adapts the object to what the device can do.

RealityKit exposes a Swift API, and some of the basic features can be implemented with ready-made code templates.
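To give a feel for that Swift API, here is a minimal sketch that builds a small metallic box and attaches it to a horizontal plane. The function name `makeBoxAnchor` and the box size and color are our own illustrative choices, not something Apple showed; adding the result to a scene (for example with `arView.scene.addAnchor(...)`) assumes you already have an `ARView` on screen.

```swift
import RealityKit
import UIKit

// Hypothetical helper: builds a 10 cm metallic box anchored to the first
// horizontal plane that RealityKit detects.
func makeBoxAnchor() -> AnchorEntity {
    // Geometry and material for the box.
    let mesh = MeshResource.generateBox(size: 0.1)
    let material = SimpleMaterial(color: .gray, isMetallic: true)
    let box = ModelEntity(mesh: mesh, materials: [material])

    // The anchor stays unresolved until a horizontal plane is found.
    let anchor = AnchorEntity(plane: .horizontal)
    anchor.addChild(box)
    return anchor
}
```

Lighting, shadows and rendering of the box are then handled by the framework rather than by the application code.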

Incidentally, at the presentation itself Apple emphasized not so much the photorealism of RealityKit rendering as the fact that it simplifies the development of AR applications. According to Apple, creating such programs no longer requires deep knowledge of 3D modeling or experience with complex game engines.

Reality Composer

This is an application that lets you create interactive AR scenes through a visual interface, without writing code. You can work with Reality Composer on both Mac and mobile devices.

The new tool looks much simpler than any 3D modeling package. The user imports USDZ files (a recent Apple format for three-dimensional objects), places them in the scene, sets the desired parameters and defines how they should react to user actions.

Reality Composer looks like a very simple tool

ARKit 3

Apple itself describes ARKit as a set of camera and motion features integrated into iOS. The new version has learned to correctly account for the position of a person in the AR scene.

Simply put, depending on where a person is in real space, ARKit 3 calculates which objects in augmented reality are behind them, which are next to them, and so on.
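In code, this behavior is switched on through the session configuration. The sketch below is a minimal example assuming a world-tracking session; the function name `makePeopleOcclusionConfiguration` is ours, and the feature is only enabled when the device reports support for it.

```swift
import ARKit

// Hypothetical helper: returns a world-tracking configuration with people
// occlusion enabled when the current device supports it.
func makePeopleOcclusionConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()

    // Person segmentation with depth lets virtual objects appear in front of
    // or behind people, depending on their distance from the camera.
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
        configuration.frameSemantics.insert(.personSegmentationWithDepth)
    }
    return configuration
}
```

The configuration would then be passed to the session, for example `session.run(makePeopleOcclusionConfiguration())`. On unsupported devices the session still runs, just without occlusion.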

The main catch is that there are still no glasses capable of displaying AR well

ARKit also lets you capture movements (Motion Capture) in real time and use them to control virtual objects.

That is, if the user raises a hand, ARKit 3 sees it and tells the virtual alter ego to raise its hand as well.
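A rough sketch of how an app might detect that raised hand is shown below. The class `BodyTracker`, the helper `isAbove` and the "hand higher than head" rule are our own illustration, and the check assumes the tracked person is standing upright so that the skeleton's y axis points up.

```swift
import ARKit
import simd

// Hypothetical helper: true when transform `a` sits higher than `b`
// along the y axis of the skeleton's model space.
func isAbove(_ a: simd_float4x4, _ b: simd_float4x4) -> Bool {
    return a.columns.3.y > b.columns.3.y
}

// Hypothetical tracker that reports when the right hand rises above the head.
final class BodyTracker: NSObject, ARSessionDelegate {
    let session = ARSession()
    var onHandRaised: (() -> Void)?

    func start() {
        // Body tracking is only available on some devices.
        guard ARBodyTrackingConfiguration.isSupported else { return }
        session.delegate = self
        session.run(ARBodyTrackingConfiguration())
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let body as ARBodyAnchor in anchors {
            // Joint transforms are expressed relative to the body anchor.
            guard let hand = body.skeleton.modelTransform(for: .rightHand),
                  let head = body.skeleton.modelTransform(for: .head) else { continue }
            if isAbove(hand, head) {
                onHandRaised?()
            }
        }
    }
}
```

An app would set `onHandRaised` to whatever should happen to the virtual character, then call `start()`.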

In addition, ARKit 3 has learned to track up to three faces at the same time, identify surfaces in space faster, detect obstacles in real space more reliably, and so on.

Apple showed the new features of ARKit 3 using Minecraft Earth, the new AR version of Minecraft. During the demo, two Mojang developers interacted with one virtual scene at the same time: they built a fortress in it and fought monsters. At one point they even placed themselves inside the scene, and it interacted with them correctly.

The Minecraft Earth demo was one of the most impressive moments of the presentation

Development environment

SwiftUI

The main purpose of this framework is to let developers build applications with less code when working with the basic functions of the system. The idea is that when writing an application with SwiftUI, developers focus on its original functionality, while standard things work almost out of the box (automatic support for Dynamic Type, Dark Mode, reuse, localization, and so on).
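As a small illustration of what "out of the box" means here, consider the sketch below. The view name `GreetingView` and its strings are made up for this example. The code never mentions text size or color scheme, yet the semantic font styles follow the user's Dynamic Type setting and the `.secondary` color adapts to Dark Mode.

```swift
import SwiftUI

// Hypothetical example view: two lines of text in a vertical stack.
struct GreetingView: View {
    var body: some View {
        VStack(spacing: 12) {
            // Semantic text styles scale with the Dynamic Type setting.
            Text("Hello, WWDC")
                .font(.headline)
            // Semantic colors adapt to light and dark appearance.
            Text("This screen needs no extra code for Dark Mode.")
                .font(.body)
                .foregroundColor(.secondary)
        }
        .padding()
    }
}
```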

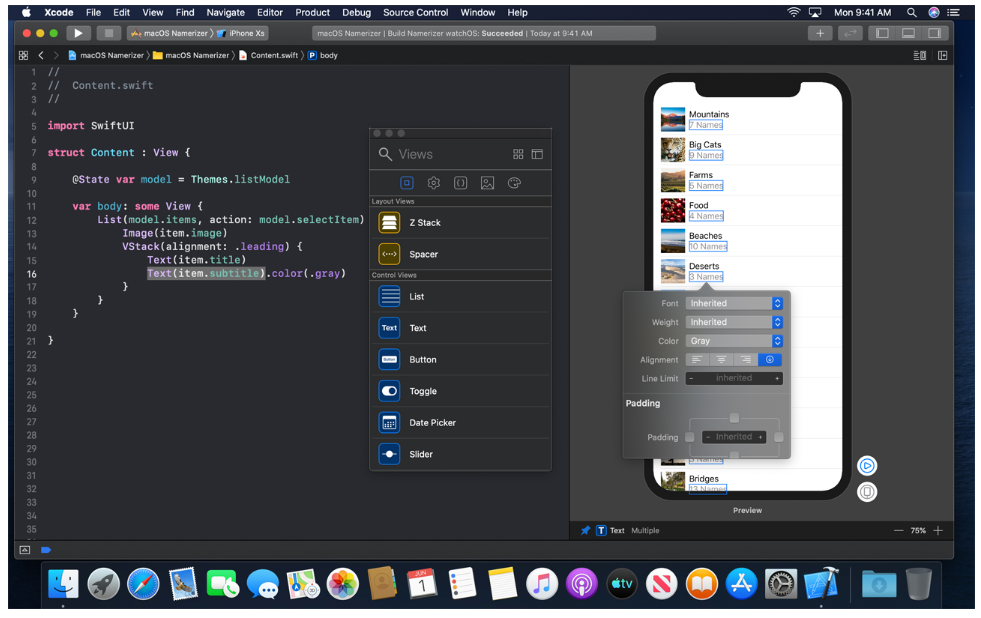

An important feature of SwiftUI is how it works with the updated Xcode 11. The new visual interface of Xcode lets you solve some UI tasks with a minimum of hand-written code.

Apple strives to simplify the development of applications in Swift as much as possible

The developer can create an interface simply by dragging objects.

The code adjusts to the changes immediately, and all changes are applied to the build in real time.

Changes related to new operating systems

Simplified conversion of iPad apps to Mac apps

iPadOS was introduced at WWDC 2019. This is a new operating system for Apple tablets, and it now serves as an intermediate link between iOS and macOS. One consequence of the new OS is that porting applications created for iPad to Mac has become simpler.

Apple assures that it is now very easy to make a native application for Mac. It is enough to tick the “Mac” checkbox in the settings of the iPad application project in Xcode 11. From then on, applications for both platforms share a single project and a single codebase.

Asphalt 9 was demonstrated as one of the examples of successful porting from iPad to Mac

Ability to develop independent apps for Apple Watch
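With a single codebase, the occasional platform-specific detail can still be isolated with a compile-time check. The function `platformName` below is a hypothetical example of ours; the `targetEnvironment(macCatalyst)` condition itself is part of Swift.

```swift
// Hypothetical helper: reports which variant of the shared code was compiled.
func platformName() -> String {
    #if targetEnvironment(macCatalyst)
    // Compiled only when the iPad app is built for Mac.
    return "Mac"
    #else
    // Compiled for every other target.
    return "Not Mac Catalyst"
    #endif
}
```

The same pattern could wrap, say, a menu bar setup on Mac while the rest of the file stays shared between the two platforms.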

watchOS 6 was also announced. Its key feature is support for independent applications.

Previously, any watch app required an iPhone app to work correctly. In fact, all calculations took place on the smartphone and the results were then sent to the watch screen. Now the need for this has disappeared.

Apple Watch can now record sound on its own, among other things

Thanks to the new operating system, Apple Watch can run applications on its own, and developers can create programs directly for the watch. They no longer need to spend time on companion apps for the iPhone.

Moreover, the watch will have its own App Store.

What else?

Among the other important changes, it is also worth noting:

- Support for third-party audio applications in SiriKit. Developers will now be able to let users control audio by voice (if you are preparing an audio game, this will come in handy).

- The update of Core ML (integrated machine learning models) to the third version. The most important part of the update is the ability to update models using the data already generated on the user’s device. Simply put, adding new ML features to an application will not make a familiar application start learning from scratch and offer irrelevant things to the user.
