We continue publishing transcripts of talks from the "Gaming Industry" conference. Next up is a presentation by Aliona Karasevova, Head of Testing at Gost Team, on the problems most frequently encountered when testing games.

In this talk, I will share case studies from my experience.

But first, a bit about myself. I entered IT 25 years ago when I was a student at an engineering faculty. I've been in the game development industry for 20 years, focusing on testing for 15 of those years.

At Gost Team, I built the QA department from scratch and expanded it to 250 employees.

My team and I have been involved in testing games (including AAA titles and applications aimed at children) and various non-gaming solutions (online cash registers, online cinemas, etc.).

This talk will discuss:

- working with requirements;

- testing strategy development;

- creating test documentation.

I won't delve deeply into the testing itself; it's an extensive topic.

Working with Requirements

This is probably the most challenging phase for any testing team, as it involves the most communication.

In this phase, it is essential to:

- discuss which elements need testing;

- specify the types of testing required;

- make sure no detail is missed and no communication breaks down;

- identify and resolve any ambiguities or contentious points when reviewing requirements.

This is often where the first problems arise.

I have encountered situations where the team commissioning our testing had no project documentation at all. Sometimes I was told the documentation was in the head of an expert who had left the company a few months earlier.

In such cases, we have to analyze the project ourselves, write the documentation, and then ask the client to clarify each element or feature to make sure our understanding is correct.

Sometimes documentation exists but is a collection of separate documents held by various specialists in different formats and degrees of completion. This typically occurs when the client lacks a unified platform for documentation. The work often proceeds like this:

- part of the documentation is being developed by one expert;

- another part by a different expert;

- once any expert finishes a document, they share it in a centralized communication channel;

- eventually, the team receives several files with the same name but different content.

The next issue is the roadmap.

It's crucial to understand that a roadmap is a reference point, a guideline aimed primarily at management, not a fixed plan.

It's common to receive a roadmap document, only to find out that timelines have shifted, a different feature is in development, and the document hasn't been updated.

Another problem I have run into at the requirements stage is an undefined target platform.

For instance, a client might come to us saying, "Here's a game; you need to go through the tutorial, explore the map, collect loot, eliminate enemies, look for defects, and document them in bug reports." Since there is no project documentation, you start asking how certain features are supposed to work and reach out to the developers to find out how each element should behave. It turns out the game needs more than functional testing: system requirements have to be defined, performance has to be tested, and, most importantly, regression testing has to be done.

Why is regression testing necessary? The product is in active development: each sprint introduces and tests new features, while the existing functionality goes unchecked because regression testing isn't part of the process.

What are the possible solutions?

First, choose a single, shared platform for documentation and data storage.

Collaborative work on documentation simplifies the tasks of all departments: analytics, marketing, and, of course, the QA department, whose staff can immediately see feature owners and their descriptions.

It is vital to keep the documentation up to date continuously. Maintaining it makes it possible to spot discrepancies in the requirements being tested.

It's common to have, say, 25 sections in project documentation. Section 1 describes a feature, while Section 25 refers to the same feature but describes it differently.

One of the testing department's tasks is to analyze such cases, identify the discrepancies, clarify how things should actually work, and inform the feature owner of the inconsistencies.

This way, the QA department not only finds functional defects but also helps adjust the roadmap so that it is clear what to focus on at any given moment. Resources are then allocated to each task accordingly.

All this leads to the next stage.

Developing a Testing Strategy

This is a critical stage for any team because it determines the resources needed for development.

We've encountered situations where a game that had already been released received new features every month, but there was no test plan and no understanding of what needed testing.

Sometimes, clients allotted only two to three weeks for QA before the release. During testing, it turned out the game had numerous critical errors, forcing the team to delay the release.

How to avoid this?

Be clear about which types of testing are needed at each point in the development cycle and why, and prioritize them.

For example, we were once asked to do performance testing during prototyping. It made no economic sense: the goal of the project at that stage was to attract investors and secure funding for further development. We explained this to the client and advised focusing on functional testing.

We also recommend drawing up a test plan, since it is what lets you determine the testing budget.

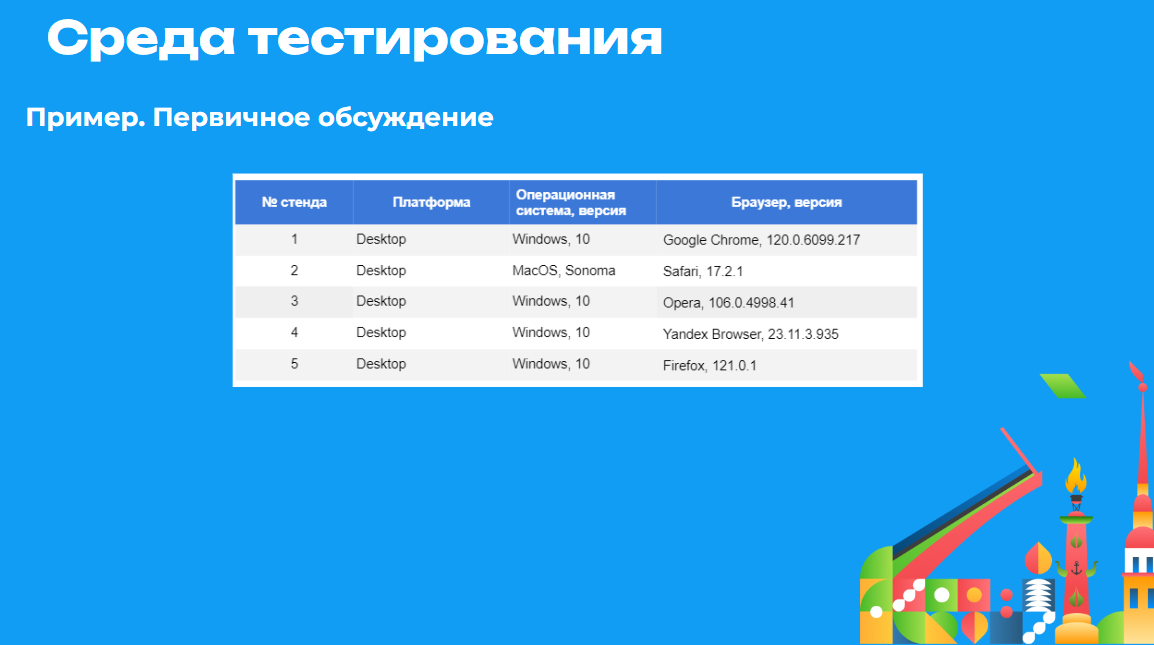
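To illustrate how a test plan turns into a budget, here is a minimal sketch: each planned activity is multiplied out by the number of configurations it must cover and how many times it repeats, and the total hours are priced at an hourly rate. The `TestActivity` structure, the activity names, and every number below are hypothetical placeholders, not figures from the talk.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TestActivity:
    """One line of a test plan (hypothetical structure for illustration)."""
    name: str
    hours_per_config: float  # effort for one pass on one configuration
    configs: int             # how many configurations the pass must cover
    passes: int              # how many times the pass repeats (e.g. once per sprint)

    def hours(self) -> float:
        return self.hours_per_config * self.configs * self.passes


def estimate_budget(plan: List[TestActivity], hourly_rate: float) -> Tuple[float, float]:
    """Return (total hours, total cost) for the whole plan."""
    total_hours = sum(activity.hours() for activity in plan)
    return total_hours, total_hours * hourly_rate


# Hypothetical plan: the numbers are placeholders, not data from the talk.
plan = [
    TestActivity("functional", hours_per_config=16, configs=4, passes=3),
    TestActivity("regression", hours_per_config=8, configs=4, passes=3),
    TestActivity("performance", hours_per_config=6, configs=2, passes=2),
]
hours, cost = estimate_budget(plan, hourly_rate=20.0)
print(f"{hours:.0f} h, cost {cost:.0f} (currency units)")
```

Because configurations appear as an explicit multiplier, a change in the test environment shows up directly in the estimate.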

The next stage, which significantly affects the budget in the test plan, is defining the test environment.

As I mentioned earlier, clients often overlook which platform or equipment should be used for testing.

For instance, there was a case where a client reached out about a web project. They anticipated their game mainly running on desktop devices, so they wanted to test it only in a few browsers on Windows and Mac.

During discussions, it turned out the client hadn't taken into account that a significant portion of web traffic now comes from mobile devices. As a result, the number of configurations doubled, because the game also had to be tested on mobile devices.

In my practice, clients often wanted to save money. They suggested testing only on the minimum possible configuration and asked the tester to do functional testing and assess performance at the same time.
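The doubling of the configuration range can be made concrete with a small configuration matrix. This sketch flattens hypothetical platform-to-browser lists into (platform, browser) pairs; the platforms and browsers are assumptions chosen for illustration, and a real project would derive them from its own traffic analytics.

```python
from typing import Dict, List, Tuple

# Hypothetical browser lists per platform (not from the talk).
DESKTOP = {
    "Windows": ["Chrome", "Edge", "Firefox"],
    "macOS": ["Chrome", "Safari", "Firefox"],
}
MOBILE = {
    "Android": ["Chrome", "Samsung Internet", "Firefox"],
    "iOS": ["Safari", "Chrome", "Firefox"],
}


def configurations(*platform_groups: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Flatten {platform: [browsers]} groups into (platform, browser) pairs."""
    return [
        (platform, browser)
        for group in platform_groups
        for platform, browsers in group.items()
        for browser in browsers
    ]


desktop_only = configurations(DESKTOP)
with_mobile = configurations(DESKTOP, MOBILE)
print(len(desktop_only), len(with_mobile))  # 6 12
```

Each pair is a configuration on which the test passes have to be repeated, which is why adding mobile doubles the effort here.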

What was the outcome?

The tester launches the game on minimum specs. It takes three minutes to load, and it runs like a slideshow. The tester ends up dealing with freezes instead of finding bugs, which prolongs testing and increases its cost.

The takeaway: don't skimp on the test environment. Minimum-spec devices should be available, but as separate test stands where, say, performance checks are run every two weeks.

Now let's move on to testing tools.

Choosing tools at the start of a project is hard because of sanctions (you can't be sure a tool will still work in the country in six months or a year) and their typically high cost.

I mentioned cost for a reason. Here is one of our cases. About three years ago, a development team contacted us: they needed compatibility and performance testing of their product over the course of a year. They budgeted about 100 hours for this testing and wanted to use a specific niche tool that collected game metrics. The tool's license cost $5,000.

What happened next?

The company paid for 100 hours of testing and bought the tool. At the end of the year, we delivered a report, but it turned out the team didn't need it.

So before collecting any data and spending money on it, weigh the pros and cons and ask whether you actually need that data.

Moreover, given the sanctions, I advise considering domestic tools to mitigate risks.

Testing

The testing phase is multifaceted, comprising many steps.

I would like to highlight quality management, particularly the issues our team identified during gaming product testing.

We often found that product quality criteria had not been defined, and we had to create a verification matrix that determines what blocks the project's release.

Another issue is the lack of parallel development and stabilization branches. The way it should work: features are developed in the development branch, and once a feature is finished, the build with it moves to the stabilization branch. Unfortunately, many developers skip this.

Another thing that is often neglected is maintaining a decision table, which records which objects need testing and which have already been tested.
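One simple way to write down release-blocking criteria is a severity-threshold gate: for each defect severity, set the maximum number of open defects the release can tolerate, and block the release if any limit is exceeded. This is a swapped-in illustration, not necessarily the matrix the team used; the severity names and limits are hypothetical.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical release criteria: maximum open defects allowed per severity.
RELEASE_CRITERIA = {
    "blocker": 0,
    "critical": 0,
    "major": 5,
    "minor": 50,
}


def release_blockers(open_defects: List[str],
                     criteria: Dict[str, int] = RELEASE_CRITERIA) -> Dict[str, int]:
    """Map each severity over its limit to how many defects exceed the limit.

    An empty result means the criteria are met and the release is not blocked.
    """
    counts = Counter(open_defects)
    return {
        severity: counts[severity] - limit
        for severity, limit in criteria.items()
        if counts[severity] > limit
    }


open_defects = ["critical", "major", "major", "minor", "minor"]
blocking = release_blockers(open_defects)
print("release blocked" if blocking else "release allowed", blocking)
# release blocked {'critical': 1}
```

Agreeing on a table like this early gives everyone the same answer to "can we ship?" instead of a debate right before the release.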
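A minimal sketch of such a table: one row per test object, whether it needs testing, and the build in which it was last verified, plus a helper that lists what is still outstanding. The field names, object names, and build numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Row:
    """One row of the table (hypothetical fields for illustration)."""
    test_object: str
    needs_testing: bool
    tested_in_build: Optional[str] = None  # build where it was last verified


def remaining(table: List[Row]) -> List[str]:
    """Objects that need testing but have not been tested yet."""
    return [r.test_object for r in table
            if r.needs_testing and r.tested_in_build is None]


def mark_tested(table: List[Row], test_object: str, build: str) -> None:
    """Record that an object was verified in the given build."""
    for row in table:
        if row.test_object == test_object:
            row.tested_in_build = build
            return
    raise KeyError(test_object)


table = [
    Row("tutorial", needs_testing=True, tested_in_build="1.4.0"),
    Row("inventory", needs_testing=True),
    Row("in-game shop", needs_testing=True),
    Row("credits screen", needs_testing=False),
]
print(remaining(table))  # ['inventory', 'in-game shop']
```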

I've already mentioned that regression testing gets ignored. In active development, teams often don't have time to go back and check other parts of the game. New features get all the attention, and other parts can suffer as a result. To prevent this, allocate time and resources for regression testing.

Some teams focus solely on functional testing and forget that the game also has to be engaging. This is where focus testing comes in, and it should be part of the plan as well. Identify the target audience and find out whether the functionality appeals to the intended players.

A common issue is that teams neglect to update test documentation. Many assume it's not important, say they don't have time, and prefer to fix bugs first. Documents stay outdated for months, then years. When a new specialist joins, the documentation often doesn't reflect reality, and it is unclear where to start.
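When a full regression pass doesn't fit into every sprint, one common compromise (swapped in here as an illustration, not something the talk prescribes) is tag-based selection: tag each regression test with the game areas it covers and, in addition to a smoke set that always runs, rerun every test touching an area changed in the sprint. The test names and tags below are hypothetical, and this complements rather than replaces periodic full regression runs.

```python
from typing import Dict, List, Set

# Hypothetical regression suite: each test is tagged with the game areas it covers.
SUITE: Dict[str, Set[str]] = {
    "smoke_launch_and_login": {"core"},
    "tutorial_completion": {"tutorial"},
    "loot_pickup_and_inventory": {"loot", "inventory"},
    "enemy_ai_basic_combat": {"combat"},
    "shop_purchase_flow": {"shop", "inventory"},
}
ALWAYS_RUN = {"smoke_launch_and_login"}


def select_regression(changed_areas: Set[str]) -> List[str]:
    """Return the always-run smoke tests plus every test touching a changed area."""
    selected = {name for name, areas in SUITE.items() if areas & changed_areas}
    return sorted(selected | ALWAYS_RUN)


print(select_regression({"inventory"}))
# ['loot_pickup_and_inventory', 'shop_purchase_flow', 'smoke_launch_and_login']
```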

It's important to review test plans regularly because projects evolve. Any new content or features require changes in the test plan.

I frequently encounter clients who don't analyze the reports they receive. I'm not sure why; perhaps they are afraid of delaying the release. We sometimes have to remind them: "Pay attention, there are these problems that need fixing."

The next quality management issue is communication.

Recently, there was a case where the testing team spent five out of eight working hours on calls and discussions, which hurt the timeline.

I've also seen teams involve everyone — developers, analysts, marketers, designers — in every call, wasting significant time on minor discussions.

However, there were also cases of the opposite extreme: complete disengagement. Teams refused to hold calls or discuss anything, which was just as harmful. Management didn't understand the state of the product, testers didn't understand how game elements worked, and developers were unclear about their tasks and fixes.

To prevent this:

- clarify team communication channels and formats;

- pre-determine which topics will be discussed in which meetings and with whom.

Communication time will decrease, and tasks will be resolved more quickly. There will be no more half-day meetings on tasks.

Finally, detailed bug reports are crucial: the more detailed they are, the fewer questions the developers of the feature will have.

That's all from me. Thank you very much for your attention.